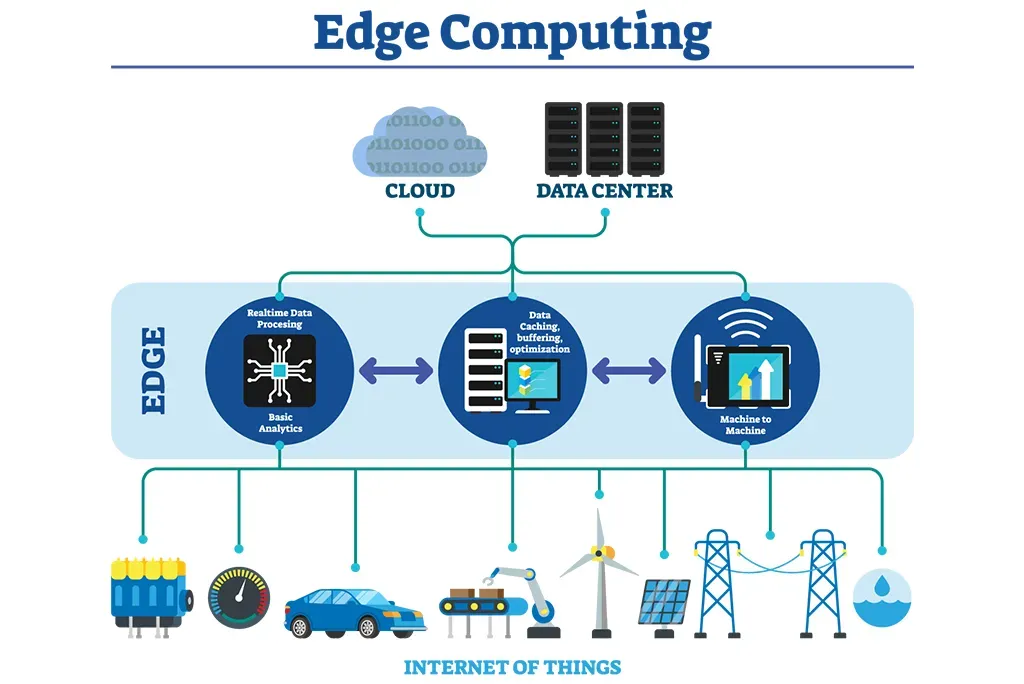

AI to Edge Computing is redefining where organizations deploy intelligence. It shifts decision-making from centralized clouds toward devices, gateways, and local servers, so insights are generated and acted upon close to the data source and latency-sensitive workloads can respond in milliseconds instead of seconds. From manufacturing floors to rural clinics, AI at the edge enables smarter equipment, downstream analytics, and autonomous operations while improving data privacy and reducing backhaul requirements. Combined with 5G connectivity and pervasive IoT deployments, edge computing supports real-time analytics for near-instant anomaly detection, proactive maintenance, and context-aware services that adapt to local conditions. Edge AI frameworks and TinyML techniques make models compact enough to run on microcontrollers and edge servers, specialized accelerators speed up inference on that hardware, and orchestration layers provide secure updates, policy enforcement, and fault tolerance. By blending edge inference with selective cloud collaboration, organizations gain resilience and scale with architectures that respect data sovereignty, lower bandwidth costs, and shorten time to value across lines of business.
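To make "compact enough to run on microcontrollers" more concrete, here is a minimal Python sketch of per-tensor int8 affine quantization, one common technique used to shrink models for TinyML targets. It assumes the weights are a plain list of floats; `quantize_int8` and `dequantize_int8` are hypothetical helpers for illustration, not the API of any particular framework.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Map float weights to int8 values in [-128, 127].

    Returns (quantized values, scale, zero_point) using an asymmetric,
    per-tensor affine scheme: w ~= (q - zero_point) * scale.
    """
    # Widen the range to include 0.0 so that zero is exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # 256 int8 levels span the float range; fall back to 1.0 for all-zero tensors.
    scale = (w_max - w_min) / 255.0 or 1.0
    # Choose zero_point so that w_min maps to -128 and w_max maps to 127.
    zero_point = round(-128 - w_min / scale)
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float weights from their int8 representation."""
    return [(v - zero_point) * scale for v in q]
```

Each weight then occupies one byte instead of four (float32), roughly a fourfold storage reduction, while the reconstruction error stays within about one quantization step (`scale`). Real toolchains add refinements such as per-channel scales and calibration data, which this sketch omits.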

Viewed through a different lens, this trend is often described as edge intelligence, in which AI processing sits closer to data sources to accelerate insight and action. You’ll also hear terms such as on-device inference, near-sensor processing, and distributed AI at the network edge, all describing a shift away from sending every data point to the cloud. Architectures typically balance cloud-based training with edge-side inference, enabling models to learn and adapt while operating locally under strict latency and privacy constraints. As platforms mature, governance, interoperability, and secure execution become central to scaling across devices, gateways, and regional hubs. At its core, the goal remains the same: extract value where it matters most by combining local cognition with centralized coordination.

AI to Edge Computing: Real-Time Intelligence at the Edge

AI to Edge Computing brings inference, decisions, and even light training closer to data sources such as sensors, cameras, and robots. By deploying AI models on edge devices (edge AI) and running TinyML on microcontrollers, organizations can cut response times to milliseconds, reduce bandwidth costs, and improve data privacy. In IoT deployments, the edge processes streams locally, delivering real-time analytics and triggering actions without waiting for a cloud round trip. 5G connectivity and robust edge architectures together make this possible, enabling smarter devices that collaborate with central systems while keeping sensitive data under local control. This is the essence of AI at the edge: intelligence where it is needed most.
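Local stream processing of this kind can be sketched as a rolling z-score detector that decides on the device whether each reading is anomalous and calls a local handler immediately, with no cloud round trip. This is a minimal illustration assuming a single numeric sensor stream; the class name, the 50-sample window, and the 3-sigma threshold are hypothetical choices, not values taken from any particular product.

```python
from collections import deque
from math import sqrt
from typing import Callable, Iterable


class RollingZScoreDetector:
    """Flags a reading that lies more than `threshold` standard deviations
    from the mean of the most recent `window` readings."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 10):
        self.buf: deque = deque(maxlen=window)  # oldest readings drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples  # wait for a baseline before judging readings

    def update(self, x: float) -> bool:
        is_anomaly = False
        if len(self.buf) >= self.min_samples:
            mean = sum(self.buf) / len(self.buf)
            std = sqrt(sum((v - mean) ** 2 for v in self.buf) / len(self.buf))
            # A perfectly flat baseline (std == 0) is skipped to avoid division by zero.
            if std > 0 and abs(x - mean) / std > self.threshold:
                is_anomaly = True
        self.buf.append(x)  # simplification: anomalies also enter the baseline
        return is_anomaly


def process_stream(readings: Iterable[float],
                   detector: RollingZScoreDetector,
                   on_anomaly: Callable[[int, float], None]) -> None:
    """Evaluate every reading on the device and act immediately on anomalies."""
    for i, x in enumerate(readings):
        if detector.update(x):
            on_anomaly(i, x)  # e.g. stop a motor or raise a local alarm
```

In practice `on_anomaly` would drive a local actuator or alarm, and only the flagged events (not the full stream) would need to be forwarded upstream.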

Architectures for AI to Edge Computing include edge-first patterns that keep critical inference at the edge, hybrid patterns that run inference locally and receive model updates from the cloud, and cloud-centric flows in which edge devices feed data to centralized training and receive periodic updates. Real-time analytics engines at the edge ingest streams from cameras, sensors, and vehicles to produce timely insights and autonomous responses. Deployments rely on containerization, cloud-native or edge-native orchestration, secure updates, and hardware accelerators (AI chips, GPUs, and specialized edge processors) that maximize throughput in resource-constrained environments. The impact spans manufacturing, healthcare, logistics, and smart cities, where lower latency and stronger privacy translate into measurable improvements in uptime, safety, and efficiency.
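The hybrid pattern with secure updates can be sketched as an edge node that always runs inference locally and accepts a new model from the cloud only if the update is authenticated and newer than the one it already has. This sketch assumes a shared-key HMAC-SHA256 tag, a JSON model payload, and a toy linear scorer; `EdgeNode` and `sign_update` are hypothetical names. Production systems typically use asymmetric signatures anchored in secure boot and attestation rather than a shared secret.

```python
import hashlib
import hmac
import json
from typing import Dict, List


class EdgeNode:
    """Hybrid pattern: local inference, cloud-pushed model updates."""

    def __init__(self, key: bytes, model: Dict):
        self._key = key      # assumption: pre-provisioned shared secret
        self.model = model   # {"version": int, "weights": [...], "bias": float}

    def infer(self, features: List[float]) -> float:
        # Local inference with a toy linear model; no network call involved.
        w, b = self.model["weights"], self.model["bias"]
        return sum(wi * xi for wi, xi in zip(w, features)) + b

    def apply_update(self, payload: bytes, tag: str) -> bool:
        expected = hmac.new(self._key, payload, hashlib.sha256).hexdigest()
        # Constant-time comparison avoids leaking tag bytes through timing.
        if not hmac.compare_digest(expected, tag):
            return False  # reject tampered or unsigned updates
        candidate = json.loads(payload)
        if candidate["version"] <= self.model["version"]:
            return False  # reject rollbacks and replays of older models
        self.model = candidate
        return True


def sign_update(key: bytes, payload: bytes) -> str:
    """Cloud side: compute the tag that accompanies a model update."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()
```

The version check matters as much as the signature: without it, an attacker could replay an old but validly signed model to roll the fleet back to a weaker version.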

Edge AI and Real-Time Analytics for IoT-Driven Operations

Edge AI and real-time analytics let IoT ecosystems extract meaningful patterns on-device, from industrial sensors to patient monitors. As data flows from devices over 5G networks to edge nodes, local analytics detect anomalies, forecast events, and trigger automated workflows in near real time while minimizing data egress. This approach supports privacy-preserving processing and reduces cloud load, enabling scalable use cases in manufacturing, healthcare, and smart cities. Running AI at the edge accelerates decision-making in situations where delays would be costly.
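One simple way to minimize data egress is to collapse each window of raw readings into a compact summary at the edge, forwarding out-of-range values verbatim so the cloud still sees exceptions. The sketch below assumes one numeric reading per sample, 60-sample windows, and a hypothetical normal range of 0 to 100; `summarize_windows` and `egress_bytes` are illustrative helpers, and JSON size is used only as a rough proxy for bytes on the wire.

```python
import json
from statistics import mean
from typing import Dict, List, Tuple


def summarize_windows(readings: List[float],
                      window: int = 60,
                      limit: Tuple[float, float] = (0.0, 100.0)) -> List[Dict]:
    """Replace each window of raw readings with one summary record."""
    lo, hi = limit
    out = []
    for start in range(0, len(readings), window):
        chunk = readings[start:start + window]
        out.append({
            "start": start,             # index of the first reading in the window
            "n": len(chunk),
            "min": min(chunk),
            "max": max(chunk),
            "mean": round(mean(chunk), 3),
            # Exceptions are forwarded as-is so nothing important is averaged away.
            "alerts": [x for x in chunk if not lo <= x <= hi],
        })
    return out


def egress_bytes(obj) -> int:
    """Approximate payload size if the object were sent as UTF-8 JSON."""
    return len(json.dumps(obj).encode("utf-8"))
```

Comparing `egress_bytes(readings)` with `egress_bytes(summarize_windows(readings))` for a given stream gives a quick estimate of how much backhaul the summarization saves; the actual ratio depends on window size and how often alerts occur.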

To implement this effectively, organizations should choose an architecture pattern (edge-first or hybrid) that fits their data sensitivity and latency targets, and build a scalable edge platform with lightweight runtimes and secure management. Emphasize governance, data lineage, and model provenance to track how insights are generated; use federated learning to improve models without raw data leaving the edge; and maintain security with secure boot, attestation, and encryption in transit. Collaboration among IT, data science, and operations teams is vital to turn real-time analytics across 5G-connected IoT deployments into measurable outcomes.
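Federated learning can be illustrated with the aggregation step of federated averaging (FedAvg): each edge site trains locally and reports only its model weights and sample count, and the coordinator computes a sample-weighted average. This is a minimal sketch assuming flat weight vectors of equal length; local training and secure transport are omitted, and `fed_avg` is a hypothetical helper name.

```python
from typing import List, Sequence, Tuple


def fed_avg(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Aggregate (weights, num_local_samples) pairs from edge sites.

    Only model weights and counts leave each site; raw training data
    stays on the edge devices.
    """
    if not updates:
        raise ValueError("no site updates received")
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(updates[0][0])
    avg = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("weight vectors must have the same length")
        # Sites with more local data contribute proportionally more.
        for i, w in enumerate(weights):
            avg[i] += w * n / total
    return avg
```

For example, a site with 100 samples reporting `[1.0, 2.0]` and a site with 300 samples reporting `[3.0, 4.0]` aggregate to `[2.5, 3.5]`, pulled toward the larger site. The averaged weights would then be pushed back to the edge as the next model version.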

Frequently Asked Questions

What is AI to Edge Computing and why is it important for IoT, 5G, and real-time analytics?

AI to Edge Computing is a tiered approach in which AI inference runs close to data sources, on edge devices or gateways, reducing latency, lowering bandwidth use, and improving privacy. By deploying edge AI and TinyML on IoT devices and edge servers, organizations can perform real-time analytics, detect patterns, and trigger actions locally. 5G provides high-bandwidth, low-latency links to edge nodes, allowing edge workloads to scale while model training and updates remain in the cloud as needed.

Which architectural patterns best support AI to Edge Computing for IoT and real-time analytics, and how should you choose between edge-first, cloud-centric, and hybrid approaches?

The main patterns are: 1) Edge-first, where critical decisions occur at the edge and only summaries are sent to the cloud; 2) Cloud-centric, where edge devices feed data to centralized AI training and receive periodic model updates; 3) Hybrid, which combines edge inference with cloud-backed model refreshes. The choice depends on latency requirements, data privacy, regulatory constraints, and available bandwidth. Whichever pattern you choose, build a scalable edge platform with lightweight runtimes, containerization, CI/CD, remote updates, monitoring, and strong security, and design IoT data flows so that time-critical and privacy-sensitive decisions stay at the edge.
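The selection criteria above can be expressed as a simple decision rule. This sketch is a hypothetical heuristic, not a standard method: it assumes you can measure the cloud round-trip time, the decision deadline, the available uplink, and the raw sensor data rate, and that a privacy or regulatory constraint is known up front. The field names and the ordering of the checks are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    latency_budget_ms: float     # end-to-end deadline for one decision
    cloud_rtt_ms: float          # measured round trip to the cloud region
    data_must_stay_local: bool   # privacy or regulatory constraint
    uplink_mbps: float           # available backhaul bandwidth
    raw_data_mbps: float         # rate at which sensors produce data


def choose_pattern(p: WorkloadProfile) -> str:
    """Return "edge-first", "hybrid", or "cloud-centric" for a workload."""
    # If data cannot leave the site, or the cloud cannot answer in time,
    # decisions have to be made at the edge.
    if p.data_must_stay_local or p.cloud_rtt_ms >= p.latency_budget_ms:
        return "edge-first"
    # If the backhaul cannot carry the raw stream, infer at the edge and
    # exchange only summaries and model refreshes with the cloud.
    if p.raw_data_mbps > p.uplink_mbps:
        return "hybrid"
    # Otherwise, centralized training and periodic updates are sufficient.
    return "cloud-centric"
```

A 10 ms control loop against an 80 ms cloud round trip lands on edge-first, while a latency-tolerant workload whose sensors outpace the uplink lands on hybrid. Real decisions would also weigh cost, fleet size, and operational maturity, which this rule ignores.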

| Aspect | Key Points |
|---|---|
| What AI to Edge Computing is | A tiered approach where inference and light training occur near the data source, reducing latency and bandwidth while enhancing data privacy. |
| Why it matters | Latency-sensitive applications (autonomous machines, predictive maintenance, real-time monitoring) benefit from near-instant insights; edge processing also supports data sovereignty and privacy goals. |
| Tech stack & enabling tech | Edge AI models (TinyML, quantization); cloud-native or edge-native platforms; containerization; accelerators (AI chips, GPUs); real-time edge analytics engines. |
| Connectivity | 5G and automotive-grade networks enable high-bandwidth, low-latency links; IoT data streams are filtered and summarized locally before cloud transmission. |
| Industry use cases | Manufacturing: predictive maintenance; Healthcare: remote monitoring and on-device decisions; Smart cities: traffic, safety, energy; Retail: edge CV for checkout; Automotive & logistics: on-vehicle routing and fleet insights. |
| Architectural patterns | Edge-first, Cloud-centric, Hybrid; cloud-native principles applied to edge contexts; emphasis on modularity and observability. |
| Enabling technologies | TinyML and compact neural networks; hardware accelerators; edge containers and lightweight orchestration; secure updates and trusted execution environments. |
| Benefits | Privacy-preserving processing; bandwidth optimization; resilience during outages; localized inference improves responsiveness. |
| Practical steps to start | Assess value and latency; choose architecture pattern; build scalable edge platform; optimize models for edge; prioritize security and privacy; cross-domain collaboration; governance and compliance; pilot, measure, and scale. |

Summary

AI to Edge Computing represents a foundational shift in how organizations deploy intelligent capabilities where they matter most. By moving inference, decision-making, and even light training toward the network edge, it reduces latency, lowers bandwidth use, and strengthens data privacy. This approach enables real-time analytics, resilient operation, and scalable architectures across manufacturing, healthcare, retail, and beyond. As edge hardware improves and federated learning matures, AI to Edge Computing is likely to become a standard component of modern digital infrastructure, combining cloud-scale insights with local responsiveness. Edge-first strategies help organizations shorten decision cycles, protect sensitive data, and optimize network usage as data volumes grow.